Researchers, Patients, and Stakeholders Evaluating Comparative-Effectiveness Research: A Mixed-Methods Study of the PCORI Reviewer Experience

This section was compiled by Frank M. Painter, D.C.


FROM: Value Health. 2018 (Oct); 21 (10): 1161-1167

OPEN ACCESS

Laura P. Forsythe, PhD, MPH • Lori B. Frank, PhD • Rachel Hemphill, PhD

A. Tsahai Tafari, PhD • Victoria Szydlowski, BS • Michael Lauer, MD

Christine Goertz, DC, PhD • Steven Clauser, PhD

Patient-Centered Outcomes Research Institute (PCORI),

Washington, DC, USA.

lforsythe@pcori.org

OBJECTIVES: The Patient-Centered Outcomes Research Institute (PCORI) includes patients and stakeholders alongside scientists in reviewing research applications using unique review criteria including patient-centeredness and patient and/or stakeholder engagement. To support extension of this unique collaborative model to other funders, information from the reviewers on the review process is needed to understand how scientists and nonscientists evaluate research proposals together. Thus, this study aimed to describe reviewers' perspectives of the interactions during the in-person review panel; to examine the value and challenges of including scientists, patients, and stakeholders together; and to understand the perceived importance of PCORI's review criteria.

METHODS: This study utilized anonymous, cross-sectional surveys (N = 925 respondents from 5 funding cycles: 470 scientists, 217 patients, 238 stakeholders; survey completion rates by cycle: 70-89%) and group interviews (N = 18).

RESULTS: Reviewers of all types describe PCORI Merit Review as respectful, balanced, and one of reciprocal influence among different reviewer types. Reviewers indicate strong support and value of input from all reviewer types, receptivity to input from others, and the panel chair's incorporation of all views. Patients and stakeholders provide real-world perspectives on importance to patients, research partnership plans, and study feasibility. Challenges included concerns about a lack of technical expertise of patient/stakeholder reviewers and about scientists dominating conversations. The most important criterion for assigning final review scores was technical merit, either alone or in conjunction with patient-centeredness or patient/stakeholder engagement.

CONCLUSIONS: PCORI Merit Reviewers' self-reports indicate that the perspectives of different reviewer types are influential in panel discussions and Merit Review outcomes.

Keywords: comparative-effectiveness research, Patient-Centered Outcomes Research Institute (PCORI), patient/stakeholder engagement, peer review, research proposal review

From the FULL TEXT Article:

Introduction

The Patient-Centered Outcomes Research Institute (PCORI) funds comparative clinical effectiveness research (CER) that helps patients and caregivers make informed health care decisions. [1] Health research funders typically rely exclusively on scientists to assess the quality and impact of potential research projects. Although much of PCORI’s research application and Merit Review process is similar to that of other major clinical research funders [2], PCORI Merit Review is unique because of extensive involvement of patients and other health care stakeholders (e.g., clinicians, health systems, payers) as primary reviewers of applications for research funding. [3] Including patients and stakeholders, the ultimate end-users of research information, in review of research applications has been proposed as one way to enhance the usefulness of evidence to decision makers and policymakers. [4–6] Other clinical research funders include patients or consumers [6–11], but the extent to which PCORI involves nonscientists in review is distinct in both number and level of involvement. PCORI also uses a unique set of review criteria. [12] In addition to technical merit, importance of the research question, and likelihood of the research to improve health care and outcomes (criteria common to clinical research funding review [13]), PCORI requires patient-centeredness and engagement of patients and/or other stakeholders in the planning and conduct of the research.

Little evidence is available about how nonscientists can be involved in research application review or the effects of this involvement on the review process. A growing literature quantitatively examining scientific review outcomes suggests that scientist, patient, and stakeholder views all play an important role in PCORI application scoring and funding decisions. [2, 14] However, a mixed-methods approach can enhance evaluation of this unique collaborative model. Qualitative assessments of the collaborative merit review process supplement quantitative findings about use of review criteria by different reviewer types and about impact on funding decisions (including in a companion article by Forsythe et al. [2]).

Post-review surveys and focus groups conducted by several funders have suggested that scientists and patients/consumers generally feel that an inclusive approach to research application review provides an important perspective regarding what matters to patients [8, 14], facilitates reciprocal learning, and allows the best science to be funded, even when effects of consumer reviewers on final review scores are limited. [15, 16] Several challenges have been identified by reviewers, including ensuring adequate preparation for reviewers, defining the optimal role for nonscientist reviewers in evaluating scientific rigor, and perceived hierarchy among different reviewer types. [11, 14, 15] Prior work is limited by small sample sizes, data from single review cycles, and much more limited involvement of patients or other stakeholders (e.g., one or two advocate reviewers on a review panel) relative to PCORI’s process. More robust information is needed to adequately understand reviewers’ views of inclusive review approaches in the context of PCORI’s highly collaborative model.

The objective of this article is to address three main research questions: (1) What are Merit Reviewers’ perceptions and experiences regarding inclusion of patients and stakeholders in the Merit Review process? (2) What were the experiences of patients, stakeholders, and scientists in the Merit Review panels? (3) What were Merit Reviewers’ experiences applying criteria?

PCORI Review Process

In this examination, applications to Broad and Targeted PCORI Funding Announcements (PFAs) were assigned for review by two scientists, one patient, and one stakeholder. Review cycles are denoted by month and year of in-person panel meeting; see Supplemental Digital Content 1 for cycle timing. [2] “Patients” include those with or at risk of a condition, unpaid caregivers to someone affected by illness, and those serving in a patient advocacy role. “Stakeholder” reviewers include clinicians, health systems, purchasers, payers, industry, research, policy makers, or staff from clinical training institutions. [17] Using an online scoring form [18], reviewers provided written critiques of application strengths and weaknesses, and assigned preliminary overall and criteria scores on a scale from 1 (Exceptional) to 9 (Poor). Scientists were required to score all criteria, while patient and stakeholder reviewers were required to score only potential to improve care, patient-centeredness, and engagement. The top scoring applications (approx. 50%, based on average preliminary overall scores) were discussed at in-person panel meetings with a 2:1:1 ratio of scientists, patients, and other stakeholders, respectively, with primary reviewers of each role speaking about the application. Scientist panel chairs facilitated and summarized the discussion. After discussion, all panel members provided a final overall score for every application discussed. Funding recommendations were made by PCORI staff and finalized by the PCORI Board of Governors based on Merit Review feedback, portfolio balance, and programmatic fit.
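The triage step described above (advancing roughly the top half of applications by average preliminary overall score) can be sketched as follows. This is a minimal illustration, not PCORI's actual procedure: the function name, the application IDs, the example scores, the rounding rule for odd counts, and the tie-break by ID are all assumptions made for the example.

```python
from math import ceil
from statistics import mean


def select_for_discussion(preliminary_scores, fraction=0.5):
    """Return the application IDs that advance to panel discussion.

    preliminary_scores maps an application ID to the preliminary overall
    scores (1 = Exceptional ... 9 = Poor) given by its assigned reviewers.
    """
    averages = {app: mean(scores) for app, scores in preliminary_scores.items()}
    # Lower averages are better, so rank in ascending order.
    # Ties are broken by application ID (an assumption for this sketch).
    ranked = sorted(averages, key=lambda app: (averages[app], app))
    # Rounding up for odd counts is also an assumption.
    n_discussed = ceil(len(ranked) * fraction)
    return ranked[:n_discussed]


# Hypothetical scores from two scientists, one patient, and one stakeholder.
example = {
    "APP-A": [2, 3, 2, 1],
    "APP-B": [6, 5, 7, 6],
    "APP-C": [3, 4, 3, 3],
    "APP-D": [8, 7, 9, 8],
}
discussed = select_for_discussion(example)
print(discussed)
```

With these made-up scores, the two applications with the lowest (best) averages advance to discussion.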

Methods

Design

A mixed-methods design was implemented with quantitative and qualitative analysis of reviewer views. [19] Approval was obtained from the MaGil Institutional Review Board (IRB). This article follows relevant reporting guidelines for observational studies and qualitative research. [20, 21]

Sample and Data Collection

Reviewer survey

An optional, anonymous web-based survey was administered to all reviewers after each review cycle. Email invitations were sent immediately following the in-person meeting, followed by up to three reminders over the subsequent 2 weeks. Because the survey was offered after each cycle, reviewers who served for multiple cycles may be represented multiple times in the data. Demographic information was not collected.

Reviewer panels align with PCORI’s five program areas and special one-time funding opportunities (Table 1). The sample excludes specific PCORI application reviews that used different reviewer models. Data from closed-ended items cover the five recent review cycles using common review criteria and processes (in-person review meetings occurring between November 2013 and August 2015; see Supplemental Digital Content 1). The qualitative analysis includes four of these review cycles.

Group interviews

Semistructured group interviews (60-minute teleconferences) were conducted with reviewers after the August 2013 cycle, to obtain in-depth input and to aid interpretation of survey data primarily to inform process improvements. Interviews were led by LPF and LBF (PhD trained psychologists). Participants were informed that the interviewers were PCORI staff with no responsibility or oversight for the review process. Interviewers recorded field notes; group interviews were audio-recorded and transcribed (one lost as a result of recording error). Scientists and patients/stakeholders were interviewed separately to facilitate openness regarding different reviewers’ experiences. Given the purpose of these groups and practical guidelines for determining the appropriate number of groups [22], two groups were conducted for each category of reviewers, with a goal of 5 to 10 participants each to facilitate group interaction. Participants were identified using a combination of purposive sampling (by reviewer type and PCORI review experience) and random sampling. Four teleconferences were held within a month of the in-person panel discussion: two for scientists (one transcript lost as a result of recording error; for the remaining group n = 5) and two for patient and other stakeholder reviewers (total N = 13).

Measures

Reviewer survey

Items were developed by PCORI staff to measure constructs relevant to PCORI Merit Review: receptivity to input from other reviewer types (two items), influence on final review scores (one item), perceptions of panel chairs’ inclusion of all viewpoints (two items), perceived value of input from each reviewer type (two items), perceptions of funding applications that matter to patients and stakeholders and that are methodologically rigorous (two items), and perceived importance of each Merit Review criterion to reviewers’ final scores (five items). Whenever possible, items were adapted from existing questionnaires. [23, 24] Each item was evaluated for clarity and consistency. [25] A 5-point Likert agree scale was used for response options. See Supplemental Digital Content 2.

Open-ended items collected feedback on PCORI’s process for including scientist, patient, and stakeholder reviewers; the value of different types of reviewers; and reviewers’ views of the most important factor(s) in determining final scores. In addition, every survey included one or more generic open-ended items for additional feedback about Merit Review. Open-ended items permitted more detailed information to explain closed-ended responses.

Group interviews

The semistructured interview guide was organized around the following domains: general feedback, training for PCORI Merit Review, review criteria and applications, group interactions, and opportunities for improvement (Supplemental Digital Content 3).

Analysis

Descriptive statistical analyses (frequencies, proportions) of quantitative survey data were performed using Stata Statistical Software: Release 13 and, based on the sampling approach and research questions, were stratified by review cycle and reviewer type. Content analysis of all open-ended survey responses and group interview transcripts was conducted using NVivo qualitative data analysis software Version 10. The analysis was guided by the three main a priori research questions noted in the introduction. A codebook was developed around these questions and refined by reviewing the open-text responses and interview transcripts. Data were thematically coded by two coders. Disagreements were resolved through consensus; intercoder agreement was 90% or higher for each code. [26] Themes were compared across key characteristics of the data, such as the review cycle, reviewer type, and reviewer experience (new or returning), but no differences emerged. Explanatory contribution of open-ended responses to quantitative findings was examined throughout.
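As a rough illustration of the two computations named above, the sketch below tabulates agreement proportions stratified by review cycle and reviewer type, and computes simple percent agreement between two coders for one code. It is written in Python rather than the Stata and NVivo tools the authors used. The response labels, the example data, and the choice of raw percent agreement (which does not correct for chance) are assumptions; the article does not specify how intercoder agreement was calculated.

```python
from collections import Counter, defaultdict

# Assumed labels for the two "agree" points of the 5-point scale.
AGREE = {"somewhat agree", "strongly agree"}


def agreement_by_stratum(responses):
    """Percent total and strong agreement per (cycle, reviewer type).

    responses is an iterable of (cycle, reviewer_type, answer) tuples.
    """
    counts = defaultdict(Counter)
    for cycle, reviewer_type, answer in responses:
        counts[(cycle, reviewer_type)][answer] += 1
    summary = {}
    for stratum, tally in counts.items():
        n = sum(tally.values())
        agree = sum(tally[label] for label in AGREE)
        summary[stratum] = {
            "n": n,
            "pct_agree": 100 * agree / n,
            "pct_strongly_agree": 100 * tally["strongly agree"] / n,
        }
    return summary


def percent_agreement(coder_a, coder_b):
    """Percent of segments on which two coders made the same decision."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must rate the same segments")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


# Hypothetical survey answers for one item in one cycle.
responses = (
    [("Aug 2014", "scientist", "strongly agree")] * 3
    + [("Aug 2014", "scientist", "somewhat agree")]
    + [("Aug 2014", "scientist", "neither")]
    + [("Aug 2014", "patient", "strongly agree")]
    + [("Aug 2014", "patient", "somewhat agree")]
)
summary = agreement_by_stratum(responses)

# Hypothetical coding decisions (1 = code applied) for ten text segments.
coder_a = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
coder_b = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]
agreement = percent_agreement(coder_a, coder_b)
print(summary[("Aug 2014", "scientist")], agreement)
```

In this made-up example the coders disagree on one of ten segments, which sits exactly at the 90% threshold reported in the text.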

Results

Across five cycles of Merit Review for PCORI’s Broad and Targeted funding, there were 960 survey respondents (Table 1). By cycle, total survey sample sizes ranged from 113 to 266 and survey completion rates ranged from 70% to 89%. Thirty-five respondents from panels that used different reviewer models were excluded, resulting in a total study sample of N = 925.
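The sample accounting above can be checked against the reviewer-type counts reported in the abstract. The short sketch below uses only figures stated in this article; the variable names are illustrative.

```python
# Figures reported in the article.
all_respondents = 960
excluded = 35  # panels that used different reviewer models
by_type = {"scientists": 470, "patients": 217, "stakeholders": 238}

study_sample = all_respondents - excluded

# The per-type counts should add up to the study sample.
counts_match = sum(by_type.values()) == study_sample

# Share of the study sample contributed by each reviewer type, in percent.
shares = {
    name: round(100 * count / study_sample, 1)
    for name, count in by_type.items()
}
print(study_sample, counts_match, shares)
```

Scientists make up about half of the responses, which is in line with the 2:1:1 panel composition described in the review process.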

Interactions in the In-Person Panel

Quantitative results

Most reviewers agreed that scientists were receptive to input from patient and stakeholder reviewers (Supplemental Digital Content 4). Across review cycles, there was consistent agreement among scientist reviewers about their receptivity to input from patient and stakeholder reviewers: more than 95% of scientist reviewers agreed, with more than 75% of scientists indicating “strong” agreement; more than 85% of patients and stakeholders agreed, with 65% or more reporting “strong” agreement. Similarly, total agreement that patients and stakeholders were receptive to input from scientist reviewers was high (>85%) across all reviewer types and cycles. Between 63% and 75% of scientists, between 71% and 91% of patients, and between 74% and 100% of stakeholders reported “strong” agreement. Total agreement that final scores were influenced by input from other reviewers exceeded 80% across all reviewer types and all five cycles (% “strongly agree” ranged across cycles as follows: scientists: 40–53%; patients: 31–45%; stakeholders: 43–58%) (Supplemental Digital Content 5).

Agreement was high that panel chairs ensured that all viewpoints were heard and that chair’s summaries of the discussion included all reviewer perspectives (>90% agreement in all cycles and across all reviewer types, with >70% reporting “strong” agreement).

Qualitative results

Two important themes emerged from the open-ended feedback, supplementing inferences from survey ratings: equality of all reviewer types and reciprocal influence. First, reviewers indicated that all reviewer types were “equal partners” and that discussions were balanced. For example, one stakeholder reviewer (February 2015 cycle) noted that “[Patients] provide a perspective that is different from but equal to that of the scientists; they provide a perspective from the lived experience of the patient.” The in-person discussion was also characterized as respectful. For example, a patient reviewer (August 2014) said it “provides opportunity for all reviewers to bring important points to the discussion. All discussions have been professional and respectful.” Similarly, a scientist reviewer (August 2014) noted that “Our group of reviewers was respectful of each other and of the process. It was clear that everyone had done a tremendous amount of work to prepare for the meeting.”

Second, reviewers reported that all reviewer types influenced one another. For example, a patient reviewer (August 2014 cycle) described the process saying “Together the reviewers build a comprehensive analysis of the application, which incorporates all perspectives.” A scientist reviewer (August 2014 cycle) reported, “In many cases, a different perspective from a patient or stakeholder reviewer caused me to reconsider the relative merit or weakness of one or more aspects of a study. In addition, there were a few cases when another scientific reviewer brought a different view that influenced my thinking.” Further, a stakeholder reviewer (May 2014 cycle) indicated that “Listening to other scientific reviewers explain the merits and likelihood of an application achieving its goals greatly affected my overall score.”

Value of Including Scientists, Patients, and Stakeholders in Merit Review

Quantitative results

Agreement that scientist reviewers provided valuable input exceeded 90% in each cycle and across all reviewer types (Supplemental Digital Content 6). Across review cycles, at least 78% of scientists, 82% of patients, and 79% of stakeholders reported they “strongly agree” that scientists provide valuable input. Similarly, more than 90% of all reviewer types across all cycles agreed that patient and stakeholder reviewers provided valuable input. At least 62% of scientists, 82% of patients, and 76% of stakeholders reported “strong” agreement that patient and stakeholder reviewers provided valuable input.

Most reviewers agreed that PCORI’s use of panels comprising scientists, patients, and other stakeholders helped to ensure that selected proposals address questions important to patients and stakeholders (Supplemental Digital Content 7). Across cycles, “strong” agreement was reported by more than 75% of both patient and stakeholder reviewers and more than 60% of scientists. Although most reviewers also tend to agree that this approach to application review helped ensure that selected proposals are methodologically rigorous, agreement for this item was less robust: across cycles between 61% and 87% of patient and stakeholder reviewers and between 50% and 61% of scientists reported “strong” agreement.

Qualitative results

Themes from open-ended responses provide explanations for the ways in which input from different types of reviewers was valuable. Reviewers described the most important contributions of patient and stakeholder reviewers as evaluating patient-centeredness and engagement, as well as providing real-world perspectives, particularly related to study feasibility and implementation. Several quotes exemplify these points:

“Patients and stakeholders were able to express practical aspects to the proposed studies—for example, to say whether expectations of study participants or clinicians were realistic.”

— Patient reviewer, Fall 2014 cycle

“They are often able to identify ‘blind spots’ in the proposals as they relate to patient engagement and patient-identified outcomes.”

— Patient reviewer, Fall 2014 cycle

“They are able to really look carefully at patient and stakeholder input and also very helpful in validating if the outcomes are truly patient centered or not.”

— Scientist, Fall 2014 cycle

“Helped to illustrate whether the application was truly able to be successfully completed in the real world.”

— Stakeholder reviewer, Fall 2014 cycle

Challenges for Including Scientists, Patients, and Stakeholders in Merit Review

In open-ended feedback, a small proportion of reviewers reported concerns about integrating scientists, patients, and stakeholders in application review. Fewer than 10 patient and stakeholder reviewers (across all cycles) indicated concern about scientists dominating the conversation. Of the approximately 20 reviewers who commented on the theme of patient and stakeholder reviewers lacking technical expertise, one third were patients or stakeholders and two thirds were scientists. Scientists contributed all but one of the comments (<10) regarding patient and stakeholder reviewers having a narrow focus on patient-centeredness and engagement.

Importance of PCORI Merit Review Criteria

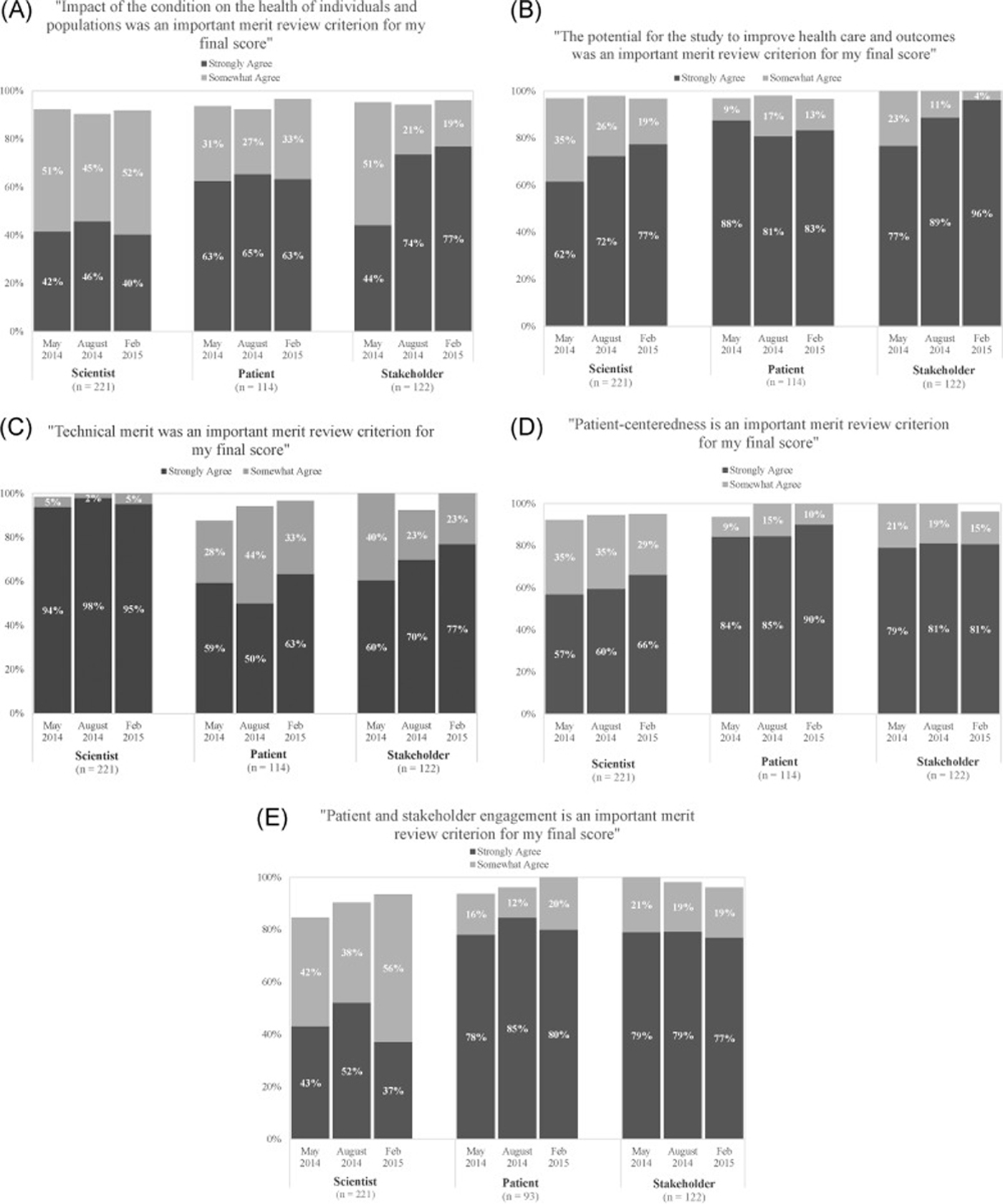

Figure 1

Quantitative results

Nearly all reviewers agreed “somewhat” or “strongly” that each of the five criteria was “very important” to their final score (Figure 1). Scientists reported the strongest agreement about the importance of technical merit (>90% “strongly agree” in all cycles). Both patients and stakeholders reported the strongest agreement regarding importance of three review criteria: the potential to improve health care and outcomes, patient-centeredness, and patient and stakeholder engagement (“strong” agreement was near or exceeded 80% for each of these criteria in all cycles). Responses were similar across cycles, with some notable time trends across three review cycles: increases in “strong” agreement among scientists (from 62% in May 2014 to 77% in February 2015) and stakeholders (from 77% to 96%) regarding the importance of the review criterion of potential to improve health care and outcomes, increases in “strong” agreement among stakeholders (60% to 77%) on the importance of technical merit, and increases in agreement among scientists on the importance of engagement (from 85% to 94%), although “strong” agreement was lower for this criterion among scientists compared to patients and stakeholders.

Qualitative results

Three themes emerged regarding the most important factors informing reviewers’ overall scores: the primacy of technical merit, the joint importance of patient-centeredness and the potential to improve health care and outcomes, and innovation.

First, across all reviewer roles, the most common theme was that technical merit, either alone or in conjunction with patient-centeredness or patient and stakeholder engagement, was the most critical factor underlying assignment of scores. For example, one patient reviewer (November 2013 cycle) noted, “It’s the technical merit that prevails because if you cannot perform the study then the money is poorly spent.” In addition, one scientist reviewer (February 2015 cycle) said, “Having a technically strong proposal is necessary for being considered for funding, but not sufficient. Strongly scored proposals ALSO need to include patients, caregivers, and stakeholder’s perspectives and needs from the inceptions of the project all the way through the dissemination process.” This theme further supports the quantitative ratings of the importance of technical merit (Fig. 1E).

A second theme centered on the relationship between criteria. Specifically, the combination of two criteria, patient-centeredness and potential to improve health care and outcomes, was important to the overall score. A patient reviewer (November 2013 cycle) noted that the most important factor(s) in determining his/her overall scores was “the degree to which a proposal was truly patient-centered and offered real promise to improve health care systems.”

Third, innovation was commonly cited as an important factor, typically in combination with other factors, such as described by a stakeholder reviewer (August 2014 cycle): “The balance between innovative ideas, good science, and what would ultimately serve the patient and caregiver(s) the best [are the most important factors influencing overall scores].” Although many reviewers indicated that technical merit was the single most important factor determining their score, most respondents mentioned more than one factor as important, highlighting the contribution of multiple criteria to application evaluation.

In addition to themes about criteria importance, some reviewers expressed difficulty understanding or applying the criteria. In particular, several reviewers commented on the difficulty of distinguishing patient-centeredness and patient and stakeholder engagement. A patient reviewer (August 2014 cycle) “had some confusion when deciding to call out strengths/weaknesses in patient-centeredness or engagement. For example, if an application engaged patients to get at patient-centered outcomes, would I highlight that under patient-centeredness, or patient engagement, or both?”

Conclusions

Inclusion of patients and other health care stakeholders in review of research applications offers promise for facilitating research that will better inform health care decisions and policymaking. This study is the first to provide in-depth information across multiple review cycles about scientist, patient, and stakeholder views on and experiences with PCORI’s uniquely collaborative review. These findings can guide collaborative review by other funders.

This study offers novel information about how different types of reviewers interact and contribute to review of research proposals. All reviewer types describe PCORI Merit Review as respectful, balanced, and one of reciprocal influence, and note success with the chair’s role eliciting and incorporating all views. The most important contributions of patient and stakeholder reviewers noted were providing input on whether studies are important to patients, have appropriate plans for partnering with patients in conducting the study, and can realistically be implemented. This study is consistent with findings from other surveys of reviewers [8, 15], albeit those from less involvement of nonscientists, and supports analyses of review scores [2] that quantify the influence of different reviewer types on PCORI Merit Review outcomes. This evidence supports the feasibility of the process, with nonscientist reviewers respectfully and meaningfully incorporated into research application review. The findings provide the critical foundational information needed to support implementation of collaborative merit review in other contexts.

Assignment of the overall final score for research applications is a complex, multifaceted process. Although reviewers of all types tend to agree that each of the criteria is very important to their final overall scores, in more recent cycles stakeholder reviewers are increasingly endorsing the importance of technical merit and scientists are increasingly endorsing the importance of patient and stakeholder engagement. These trends may be due in part to returning reviewers’ repeated exposure to the PCORI review process, formal training, and interaction with other reviewers, and/or a growing acculturation of both types of reviewers to the other group’s primary focus. Although ratings of importance do not necessarily correspond to “weighting” of criteria in assigning a final score, the current findings support quantitative score data on the importance of technical merit and aspects of patient-centeredness and likelihood of improving care in PCOR. [2] The difficulty reported by some reviewers in distinguishing between the patient-centeredness and research engagement criteria reflects discussions in the field more broadly. [27] The feedback contributed to refinements in PCORI’s reviewer training, ensuring definitions of merit review criteria are clearly communicated. The findings also led to further refinement of the criteria themselves, to improve clarity about the distinction between patient-centeredness and patient and stakeholder engagement and to improve communication about the concept of patient-centeredness. Specifically, the descriptors used to guide reviewers were revised to provide more guidance on “meaningful” patient-centeredness and to improve focus of the stakeholder engagement criterion.

A small number of reviewers expressed concerns about the PCORI review process that are important for understanding the quality of resulting reviews. In particular, a few reviewers expressed concern about limitations in the methodological expertise of nonscientist reviewers and an overemphasis on the patient-centeredness or patient and stakeholder engagement criteria by nonscientist reviewers. Quantitative merit review score analyses indicating the predominance of scientist ratings of technical merit in final overall scores [2] mitigate these quality concerns. Other concerns expressed by a small number of reviewers about dominance of scientists relative to other reviewers in discussion require further attention. PCORI uses the survey results to assess and refine training for all reviewer types, with periodic changes to training of reviewers as well as panel chairs, aimed at facilitating rigorous and fair evaluation of applications and inclusion of all perspectives in the panel discussion. Respondents’ views regarding the collaborative review process were largely positive. However, it is important to note that feedback from reviewers on other topics related to Merit Review is more mixed, highlighting concerns and opportunities for improvement, such as burden and time commitment of the review process and needs for additional or improved reviewer training and resources. Although these specific findings are beyond the scope of this article, they provide important context that reviewers were not as uniformly positive about all aspects of the review process as they were regarding the inclusive approach.

This study has several limitations. Although surveys were anonymous, respondents may overemphasize positive experiences because the survey is conducted by PCORI. Although anonymity reduces the likelihood of response bias, it limits subgroup analyses by reviewer experience and other characteristics. Despite high survey response rates (>70% for all cycles), responses may not be representative of all reviewer views. Differences between respondents and nonrespondents are unknown, and reviewers with especially positive or especially negative experiences may have been more likely to respond to the survey. Repeat reviewers, whose views may differ from those of one-time reviewers, may be overly represented in the responses. The directionality of the collective effects of these limitations on study findings is unknown. Group interviews were intended to provide additional depth of understanding. Full thematic saturation may not have been reached, however, and interviewees’ views may not generalize to all reviewers.

Researchers have called for evaluation of the review process effects on quality and impact of funded research. [28] In the absence of experimental designs, mixed methods enhance understanding about the unique collaborative approach to application evaluation. This study, along with quantitative score data demonstrating that ratings from all reviewer types are associated with review scores and funding decisions [2], suggests that PCORI’s process identifies research applications perceived as relevant and important by patients and health care stakeholders, as well as scientists. Ultimately, PCORI seeks to determine the extent to which the patient-centered approach leads to distinct impact in terms of usefulness of research findings for multiple health care decision makers; speed of uptake of results in decision making; and effect on the quality of health decisions, health care, and health outcomes. Given increasing interest in engagement of consumers in clinical research, these results can inform engagement in research application review by other funders.

Supplementary material

Figure S1

Figure S2

Figure S3

Figure S4

Figure S5

Figure S6

Figure S7

Figure S8

Figure S9

Acknowledgments

PCORI thanks everyone who has served as a Merit Reviewer for their time and invaluable input on research applications. The authors also gratefully acknowledge Jennifer Huang, PhD, and Paula Darby Lipman, PhD, for their contributions to the qualitative analysis of open-ended responses to the PCORI Reviewer Survey; Mary Jon Barrineau, PhD, for her contributions to the descriptive analysis; Krista Woodward, MSW, MPH, for her management of relevant literature and development of figures; and Lauren Fayish, MPH, for her guidance on developing the graphs and figures in this article. PCORI provided funding for this work.

References:

1. Compilation of Patient Protection and Affordable Care Act: patient-centered outcomes research and the authorization of the Patient-Centered Outcomes Research Institute. Subtitle D. Title VI. Sec 6301. 2010. Available from: https://www.pcori.org/sites/default/files/PCORI_Authorizing_Legislation.pdf [Accessed May 29, 2018].

2. Forsythe, L.P., Frank, L.B., Tafari, A.T. et al. Unique review criteria and patient and stakeholder reviewers: analysis of PCORI’s approach to research funding. Value Health. http://dx.doi.org/10.1016/j.jval.2018.03.017.

3. Selby, J.V., Forsythe, L., and Sox, H.C. Stakeholder-driven comparative effectiveness research: an update from PCORI. JAMA. 2015; 314: 2235–2236

4. Entwistle, V.A., Renfrew, M.J., Yearley, S. et al. Lay perspectives: advantages for health research. BMJ. 1998; 316: 463–466

5. Kotchen, T.A.S. and Spellecy, R. Peer review: A research priority. 2012; https://www.pcori.org/assets/Peer-Review-A-Research-Priority11.pdf

6. Young-McCaughan, S., Rich, I.M., Lindsay, G.C., and Bertram, K.A. The Department of Defense Congressionally Directed Medical Research Program: innovations in the federal funding of biomedical research. Clin Cancer Res. 2002; 8: 957–962

7. National Institute for Health Research. Become a reviewer. Evaluation, trials, and studies. 2016; http://www.nets.nihr.ac.uk/become-a-reviewer

8. Monahan, A. and Stewart, D.E. The role of lay panelists on grant review panels. Chron Dis Can. 2003; 24: 70–74

9. Susan G. Komen for the Cure. Advocates in Science Steering Committee. Ensuring the patient voice is heard. 2016; https://ww5.komen.org/ResearchGrants/KomenAdvocatesinScience.html

10. Agnew, B. NIH invites activists into the inner sanctum. Science. 1999; 283: 1999–2001

11. Gilkey, M.B. Supporting cancer survivors’ participation in peer review: perspectives from NCI’s CARRA program. J Cancer Surviv Res Pract. 2014; 8: 114–120

12. Patient-Centered Outcomes Research Institute. Merit Review Criteria. 2014; http://www.pcori.org/funding-opportunities/merit-review-process/merit-review-criteria

13. National Institutes of Health. Review Criteria at a Glance. 2016; https://grants.nih.gov/grants/peer/guidelines_general/Review_Criteria_at_a_glance-research.pdf

14. Fleurence, R.L., Forsythe, L.P., Lauer, M. et al. Engaging patients and stakeholders in research proposal review: the Patient-Centered Outcomes Research Institute. Ann Intern Med. 2014; 161: 122–130

15. Andejeski, Y., Breslau, E.S., Hart, E. et al. Benefits and drawbacks of including consumer reviewers in the scientific merit review of breast cancer research. J Womens Health Gend Based Med. 2002; 11: 119–136

16. Andejeski, Y., Bisceglio, I.T., Dickersin, K. et al. Quantitative impact of including consumers in the scientific review of breast cancer research proposals. J Womens Health Gend Based Med. 2002; 11: 379–388

17. Patient-Centered Outcomes Research Institute. Reviewer FAQs. 2015; http://www.pcori.org/get-involved/review-funding-applications/reviewer-resources/reviewer-faqs#general [Accessed May 29, 2018].

18. Hickam, D.T., Berg, A., Rader, K. et al. The PCORI Methodology Report. Patient-Centered Outcomes Research Institute, Washington, DC; 2013

19. Creswell, J.W. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 2nd ed. SAGE, Thousand Oaks, CA; 2003

20. Tong, A., Sainsbury, P., and Craig, J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007; 19: 349–357

21. von Elm, E., Altman, D.G., Egger, M. et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Ann Intern Med. 2007; 147: 573–577

22. Carlsen, B. and Glenton, C. What about N? A methodological study of sample-size reporting in focus group studies. BMC Med Res Methodol. 2011; 11: 26

23. Office of Extramural Research. Enhancing Peer Review Survey Results Report. National Institutes of Health, Bethesda, MD; 2010

24. Office of Extramural Research. Enhancing Peer Review Survey Results Report. National Institutes of Health, Bethesda, MD; 2013

25. Willis, G.B.L. and Lessler, J.T. Question Appraisal System QAS-99. Research Triangle Institute, Rockville, MD; 1999

26. Miles, M.B. and Huberman, A.M. Qualitative Data Analysis: An Expanded Sourcebook. SAGE, Thousand Oaks, CA; 1994

27. Frank, L., Basch, E., and Selby, J.V.; Patient-Centered Outcomes Research Institute. The PCORI perspective on patient-centered outcomes research. JAMA. 2014; 312: 1513–1514

28. Lauer, M.S. and Nakamura, R. Reviewing peer review at the NIH. N Engl J Med. 2015; 373: 1893–1895
